Assessing the Functionality and User Experience of an AI Assistant's Continuous Dialogue Feature through a Survey

@Xiaomi Technology

Introduction to XiaoAI: The AI Assistant's Continuous Dialogue Feature

XiaoAI is an intelligent virtual assistant created by Xiaomi Technology that runs on multiple devices. It provides services similar to Siri and Alexa but offers a much broader range of functions. My UX research team focused primarily on XiaoAI on the smartphone platform.

Continuous Dialogue is a XiaoAI feature launched one year ago. While AI assistants normally disengage after responding to a single query, XiaoAI can be set to keep listening without requiring the user to activate it again. Each new exchange is linked, to some extent, to previous ones.

Project Overview

Background

Continuous Dialogue is a feature that allows XiaoAI to keep listening and responding to the user after an exchange ends.

Project Goals

Our UX team wanted to evaluate the actual user experience of the feature and determine whether it needed further development.

My Contributions

- Communicated with PMs, executives, engineers, and other UX researchers
- Designed the survey
- Mapped the survey's user flow
- Explored and analyzed the data
- Generated reports and slides

Timeline

October 2020 - November 2020, 9 weeks

Key Findings

According to the Kano model, Continuous Dialogue is a one-dimensional feature for retained users: the better it performs, the higher user satisfaction, and the worse it performs, the lower user satisfaction.

The main factor hurting the experience is the AI assistant's contextually incoherent responses in conversation with users.

Key Recommendations

The product team may consider improving dialogue fluency.

Improving Acoustic Echo Cancellation (AEC) and voice interruption functionality is another direction for future development.

Background

- The database metrics for Continuous Dialogue usage (including DAU, multi-round conversations, and user retention rates) showed positive results.
- However, usage metrics alone have limits; a comprehensive understanding also requires examining users' subjective experiences.
- The product team needed real user feedback to understand the Continuous Dialogue feature better and to set priorities for its development.
- I worked closely on this project with a UX researcher, a product manager, and our team lead.

Key Questions

- What is the perceived value proposition of the Continuous Dialogue feature among users?
- How do users describe their experience with the Continuous Dialogue feature?
- To what extent does the feature encourage users to engage with the AI assistant, and in which specific domains or tasks?
- What strategies could improve user guidance within the Continuous Dialogue feature?

Challenges

- Collecting feedback from different types of users in a single survey, even though not all questions apply to everyone.
- Creating survey questions that cover a wide range of aspects.
- Conducting complex exploratory data analysis to uncover insights.
- Addressing stakeholders' follow-up questions about our research report.

Method

- To gain insights from a large number of users and collect statistical metrics, our research team chose a survey as the primary research tool.

- We quickly realized that a single set of questions wouldn't suit all users, so I worked with our User Experience Research (UXR) lead to categorize users into five groups:

1. Users who don’t know Continuous Dialogue.

2. Users who know it but never use it.

3. Users who used it before.

4. Users who currently use it.

5. Users who can’t remember clearly.

-

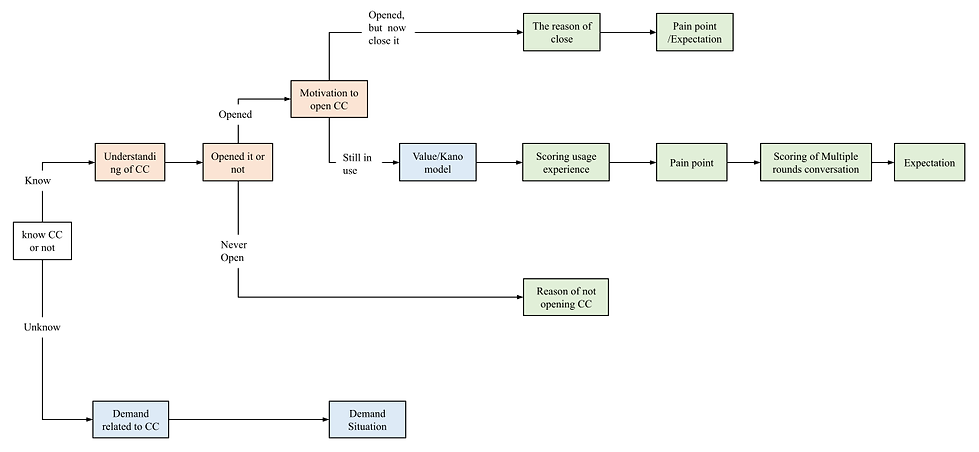

After operationalizing the types of users, I designed questions for the users based on their usage status and drew a flow chart of the Survey in collaboration with stakeholders (Figure 1).

Figure 1: Flow chart of the Survey

Note. Some details have been simplified for presentation purposes; CC refers to the Continuous Dialogue feature.
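The branching in Figure 1 can be thought of as a lookup from a respondent's screener answer to an ordered list of question blocks. The sketch below illustrates that idea only: the enum, the block names (`usage_domains`, `reasons_stopped`, and so on), and the shared `screener`/`closing` blocks are hypothetical and do not reproduce the actual survey.

```python
from enum import Enum


class UsageStatus(Enum):
    """The five user groups identified by the survey's screening question."""
    UNAWARE = 1           # doesn't know Continuous Dialogue
    AWARE_NEVER_USED = 2  # knows it but never uses it
    FORMER_USER = 3       # used it before
    CURRENT_USER = 4      # currently uses it
    UNSURE = 5            # can't remember clearly


# Hypothetical block names for illustration; the real branching is shown in Figure 1.
BLOCKS_BY_STATUS = {
    UsageStatus.UNAWARE: ["feature_description", "interest_in_feature"],
    UsageStatus.AWARE_NEVER_USED: ["reasons_not_used", "interest_in_feature"],
    UsageStatus.FORMER_USER: ["reasons_stopped", "experience_ratings", "nps"],
    UsageStatus.CURRENT_USER: ["usage_domains", "experience_ratings", "kano_pair", "nps"],
    UsageStatus.UNSURE: ["feature_description", "interest_in_feature"],
}


def question_path(status: UsageStatus) -> list[str]:
    """Return the ordered question blocks a respondent sees for their usage status."""
    # Everyone starts with the same screener and ends with the same closing block,
    # so a few items remain comparable across all five groups.
    return ["screener", *BLOCKS_BY_STATUS[status], "closing"]


print(question_path(UsageStatus.FORMER_USER))
```

Keeping the routing in one table makes it easy to see which blocks each group shares, which matters if responses will later be compared across groups.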

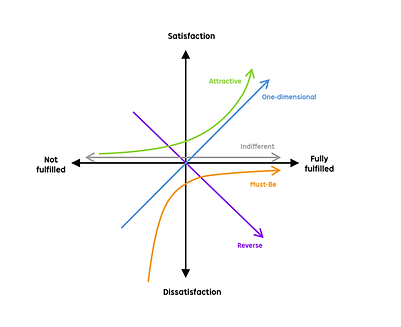

Figure 2: Kano Model

- The survey included three types of questions:
  - Multiple-choice questions
  - 5-point rating scale questions
  - Open-ended questions

- To better understand the value of Continuous Dialogue, I introduced the Kano Model, which classifies customer preferences for product features into five categories: must-be, one-dimensional, attractive, indifferent, and reverse (see Figure 2).
  - For instance, a must-be quality leaves customers neutral when done well but makes them very dissatisfied when done poorly. A one-dimensional quality, by contrast, raises satisfaction the better it is delivered and causes dissatisfaction when it falls short.

- I refined the survey over several revisions and confirmed with stakeholders that it aligned with their objectives. I then sent the survey link to over 600,000 AI assistant users, which yielded over 3,000 valid responses.

Figure 3: Percentage of Feature Users by Type

(Certain information is obscured for confidentiality.)
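For readers unfamiliar with Kano scoring, the usual procedure pairs a functional question (how the respondent feels if the feature works well) with a dysfunctional one (how they feel if it does not) and looks up the answer pair in the standard Kano evaluation table. The sketch below assumes that standard five-answer table and a simple majority (mode) rule for assigning the feature's category; the answer labels are illustrative, and the study's exact scoring procedure is not described here.

```python
from collections import Counter

# Answer options for both questions of a Kano pair (labels are illustrative).
ANSWERS = ["like", "expect", "neutral", "tolerate", "dislike"]

# Standard Kano evaluation table.
# Rows: answer to the functional question ("if the feature works well...").
# Columns: answer to the dysfunctional question ("if it does not...").
# A = attractive, O = one-dimensional, M = must-be,
# I = indifferent, R = reverse, Q = questionable (contradictory pair).
KANO_TABLE = [
    # like expect neutral tolerate dislike
    ["Q", "A", "A", "A", "O"],  # like
    ["R", "I", "I", "I", "M"],  # expect
    ["R", "I", "I", "I", "M"],  # neutral
    ["R", "I", "I", "I", "M"],  # tolerate
    ["R", "R", "R", "R", "Q"],  # dislike
]


def classify(functional: str, dysfunctional: str) -> str:
    """Kano category for one respondent's answer pair."""
    return KANO_TABLE[ANSWERS.index(functional)][ANSWERS.index(dysfunctional)]


def feature_category(pairs):
    """Most frequent category across respondents, ignoring questionable pairs."""
    counts = Counter(classify(f, d) for f, d in pairs)
    counts.pop("Q", None)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Toy answers, not study data: two "O", one "M", one "A".
print(feature_category([("like", "dislike"), ("like", "dislike"),
                        ("neutral", "dislike"), ("like", "neutral")]))
```

Running the classification separately for each user segment (for example, retained users only) is a natural extension, since a feature can fall into different categories for different groups.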

Results Analysis

Exploratory data analysis

I conducted extensive exploratory data analyses in Microsoft Excel and SPSS to explore the relationships among variables. The analyses included:

- Data cleaning
- Frequencies and crosstabs
- Group comparisons (t-tests, F-tests)
- Regression analysis
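The group comparisons above were run in SPSS. As a rough illustration of what those tests compute, the sketch below derives Welch's t statistic (a t-test that does not assume equal variances) and a one-way ANOVA F statistic using only the Python standard library. Whether the study used Welch's variant is an assumption, and the sketch returns test statistics only, not p-values.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    # Squared standard error of each sample mean.
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def one_way_anova_f(*groups):
    """F statistic for a one-way ANOVA across two or more groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Toy 5-point ratings for two groups, not study data.
a = [4, 5, 5, 4, 3]
b = [2, 3, 2, 1, 2]
print(welch_t(a, b), one_way_anova_f(a, b))
```

For p-values, `scipy.stats.ttest_ind(a, b, equal_var=False)` and `scipy.stats.f_oneway(*groups)` perform the same tests. With two groups of equal size, the ANOVA F equals the square of this t statistic, which is a handy sanity check.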

As an example finding, I segmented users into two groups based on how they understood the feature:

- The contextual coherence group: users who perceived the feature as supporting contextual coherence.
- The one-time activation group: users who believed the feature simply meant they did not need to activate the assistant again to keep talking.

I then examined how each group's understanding related to its Net Promoter Score (NPS).

The analysis revealed a significant difference in NPS between the two groups: the contextual coherence group had notably lower NPS than the one-time activation group, among both retained and churned users. The contextual coherence group also scored lower than the one-time activation group on several other measures (see Figure 4).

Figure 4: Analysis Example of Perceived Feature Understanding

(Certain information is obscured for confidentiality.)
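NPS is conventionally computed from a 0-10 "How likely are you to recommend...?" rating as the percentage of promoters (9-10) minus the percentage of detractors (0-6). The sketch below applies that standard formula and breaks the score down by perceived-understanding group and retention status, mirroring the comparison above; the segment labels and tuple layout are hypothetical.

```python
from collections import defaultdict


def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one rating")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def nps_by_segment(responses):
    """NPS per (perception_group, retention_status) segment.

    `responses` is an iterable of (perception_group, retention_status, rating)
    tuples; this layout and the labels below are hypothetical.
    """
    ratings = defaultdict(list)
    for perception, retention, rating in responses:
        ratings[(perception, retention)].append(rating)
    return {segment: nps(scores) for segment, scores in ratings.items()}


# Toy ratings for illustration only, not study data.
toy = [("coherence", "retained", 10), ("coherence", "retained", 4),
       ("one_time", "retained", 9), ("one_time", "churned", 7)]
print(nps_by_segment(toy))
```

Because NPS is an aggregate, a significance test between groups is usually run on the underlying 0-10 ratings (or on promoter/detractor proportions) rather than on the two NPS values themselves.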

Text analysis

We analyzed users' queries and the assistant's TTS (text-to-speech) responses, as well as users' feedback in the open-ended questions.

- Sorted Continuous Dialogue query and TTS pairs into good cases and bad cases based on whether the AI understood and fulfilled the user's request correctly.

- Coded user feedback from the open-ended questions into quantifiable categories (e.g., the AI recognizing its own voice, contextual incoherence).
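Turning coded open-ended feedback into quantitative types usually amounts to counting how many respondents each code applies to. The sketch below assumes the coding itself was already done (the study does not say how) and that one response can carry several codes; the code labels are hypothetical.

```python
from collections import Counter


def code_frequencies(coded_responses):
    """Percentage of respondents whose feedback was tagged with each code.

    `coded_responses` holds one list of codes per respondent; a response may
    carry several codes, and a repeated code is counted once per respondent.
    """
    if not coded_responses:
        return {}
    n = len(coded_responses)
    counts = Counter(code for codes in coded_responses for code in set(codes))
    return {code: 100 * count / n for code, count in counts.most_common()}


# Hypothetical code labels and toy data, not study results.
feedback = [
    ["contextual_incoherence", "hears_own_voice"],
    ["contextual_incoherence"],
    ["environmental_sound"],
    [],
]
print(code_frequencies(feedback))
```

Because a response can carry several codes, the percentages can sum to more than 100.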

Crucial insights

- Continuous Dialogue as a One-dimensional Feature: For retained users, Continuous Dialogue is a one-dimensional feature. It raises satisfaction when fulfilled and causes dissatisfaction when not.
- Positive Impact on User Experience: After Continuous Dialogue was introduced, a significant majority of users reported a better experience with the AI assistant, and their interactions with the assistant also showed increased engagement.
- Expectation of Contextual Coherence: Many users expected the Continuous Dialogue feature to make their conversations contextually coherent, that is, for the AI assistant to understand the earlier context of the dialogue. Because the feature is not designed to do this, the unmet expectation lowered their satisfaction.
- Major Pain Points: The primary issues with the Continuous Dialogue feature were contextual incoherence and inaccurate detection of environmental sounds.

Recommendations

→ Shift Focus to the Dialogue Process: Instead of concentrating solely on single Q&A interactions, future development should focus on improving and evaluating the experience of the dialogue process as a whole.

→ Improve Acoustic Echo Cancellation (AEC) and Voice Interruption Functionality: Consider prioritizing improvements to AEC, which filters the assistant's own speech output out of the microphone signal, to improve audio quality during interactions. Additionally, letting users turn off the voice interruption function could give them more flexibility and control over their interactions with the AI assistant.

Research Impact

- Created detailed written reports and presentation slides.
- Uncovered user expectations and pain points that challenged stakeholders' assumptions.
- Offered product managers and developers clear, prioritized development guidance for the Continuous Dialogue feature and the AI assistant.
- Strengthened collaboration with the product team, making the UX research team a critical partner.
- Developed new strategies and company-wide processes for stakeholder collaboration.
- Expanded our influence across the AI department, with the UI team seeking our input on UX research.
- Gained valuable experience researching a single product function in depth.

My learning

- Keeping a record of all stakeholder meetings is crucial: it lets researchers review stakeholder requests and keep stakeholders accountable for those requests throughout the research process.
- User experience is also shaped by users' expectations of the product, so managing or changing those expectations can change the experience. In some cases, even a feature's name can create misleading expectations.
- The more researchers involve stakeholders at each stage of the research process, the more willing stakeholders are to act on UX research findings.
- What I would do differently: use standardized questions across user groups so their responses can be compared directly.